I would like to share with you the great work we did at the last NASA hackathon. A friend invited me to take her place on a team of five working on an artificial intelligence solution.

Of the five team members, one came from nanotechnology, two from mechatronics and one from marketing, and I was the only artificial intelligence expert … It was difficult.

Especially because the challenge we set ourselves was to build an artificial intelligence solution … and I was the only one who knew the subject … and the only one who knew how to program.

We developed a methodology for consuming the resources and procedures that NASA stipulates for crew safety during a mission. The ship's sensor readings are compared against these standards, and a virtual assistant issues a verdict.

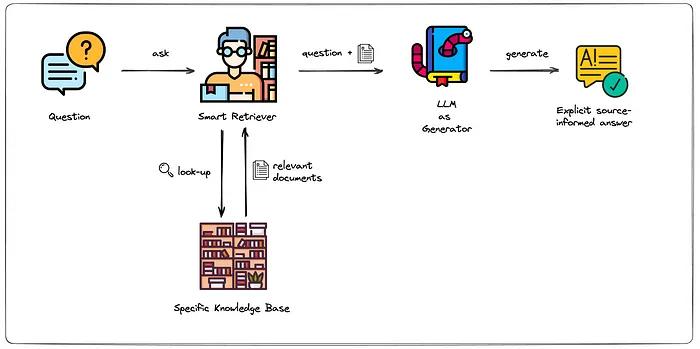

The solution accounts for a key limitation of LLM-based AI: its tendency to hallucinate, that is, to invent information.

This happens because these tools have learned grammatical patterns, and those patterns appear in both facts and falsehoods. The only way to tell what is true from what is not is to compare it against reality itself or against established definitions. We therefore add a security layer that ensures these tools are used not as sources of information, but as semantic translators of it.
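One way to enforce "translator, not source" is a post-check on the model's answer: any figure it states must also appear in the source passages it was given, otherwise the answer is rejected. The sketch below is a hypothetical, deliberately narrow guard that checks numbers only (not wording); the function name `is_grounded` and the sample requirement text are illustrative assumptions, not the team's implementation.

```python
import re

# Matches unsigned integers and decimals such as "5", "3.0", "27.5".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def is_grounded(answer: str, sources: list[str]) -> bool:
    """Return True only if every number in the answer also appears in a source.

    Hypothetical guard: the LLM may rephrase the sources, but it may not
    introduce figures that the documents never provided.
    """
    source_numbers = set()
    for text in sources:
        # Compare as floats so "27" and "27.0" count as the same value.
        source_numbers.update(float(n) for n in NUMBER.findall(text))
    answer_numbers = {float(n) for n in NUMBER.findall(answer)}
    return answer_numbers <= source_numbers


# Illustrative requirement text, not taken from a NASA document.
sources = ["Cabin temperature shall stay between 18 and 27 C."]
print(is_grounded("The allowed range is 18 to 27 C.", sources))  # True
print(is_grounded("The allowed range is 15 to 30 C.", sources))  # False
```

A check like this cannot prove an answer is correct, but it cheaply catches the most dangerous kind of hallucination in a safety context: invented limits.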

RAG: Retrieval-Augmented Generation
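In a RAG setup, relevant passages are retrieved first and the model is instructed to answer only from them. The minimal sketch below assumes a plain bag-of-words overlap score in place of whatever retriever the team actually used; the function names, prompt wording and sample passages are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase alphanumeric tokens; punctuation is discarded.
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank passages by how many query tokens they share (bag-of-words overlap)."""
    q = Counter(tokenize(query))
    scored = []
    for p in passages:
        # Counter & Counter keeps the minimum count of each shared token.
        overlap = sum((q & Counter(tokenize(p))).values())
        scored.append((overlap, p))
    # Highest overlap first (stable sort keeps input order on ties).
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop passages that share no terms with the query at all.
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Constrain the model to rephrase the retrieved context, not to recall facts."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using ONLY the context below. If the context does not contain "
        "the answer, reply 'not found in the documents'.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}"
    )


passages = [
    "Cabin oxygen must be monitored continuously.",
    "Water recycling procedure for the galley.",
    "Oxygen partial pressure alarms trigger crew alerts.",
]
context = retrieve("oxygen alarms", passages)
print(build_prompt("oxygen alarms", context))
```

The string returned by `build_prompt` would then be sent to whichever LLM is in use; that call is left out on purpose, since the point here is that the model only ever sees retrieved text.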

The methodology has three phases, designed to keep resource consumption as low as possible and to minimize the risk of inventing information along the way:

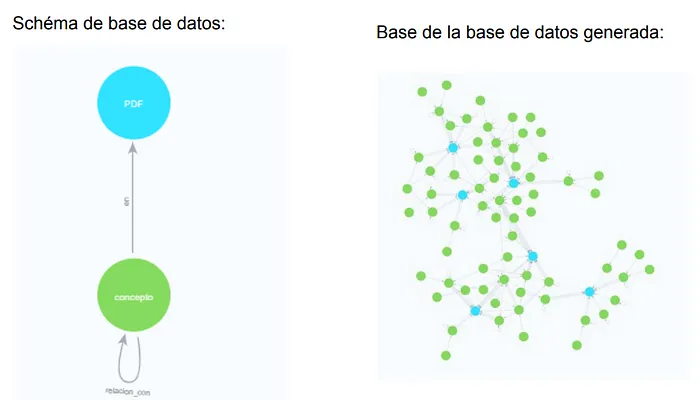

- The first stage builds a concept map that records where information can be found across the PDFs NASA uses to describe its standards and processes. This structure is commonly known as a “knowledge graph”; our solution is a simplified version of one.

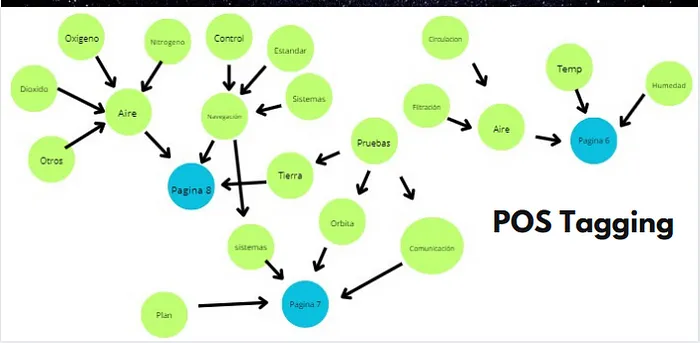
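A concept map of this kind can be sketched as an index from each concept to the (document, page) locations where it appears, plus links between concepts that share a page, which is one simple way to get a minimal knowledge graph. The sketch assumes the PDF text has already been extracted page by page (PDF parsing is out of scope), and uses a hypothetical fixed concept vocabulary and made-up document names.

```python
import re
from collections import defaultdict
from itertools import combinations

# Hypothetical fixed vocabulary; in a real pipeline concepts come from the documents.
CONCEPTS = {"oxygen", "pressure", "temperature", "radiation", "crew"}


def build_concept_map(pages):
    """pages: {(document, page_number): page_text}.

    Returns (locations, edges):
      locations[concept] -> set of (document, page_number) where it appears
      edges[concept]     -> set of concepts sharing at least one page with it
    """
    locations = defaultdict(set)
    edges = defaultdict(set)
    for location, text in pages.items():
        found = sorted(CONCEPTS & set(re.findall(r"[a-z0-9]+", text.lower())))
        for concept in found:
            locations[concept].add(location)
        # Concepts that co-occur on a page get linked: a minimal knowledge graph.
        for a, b in combinations(found, 2):
            edges[a].add(b)
            edges[b].add(a)
    return locations, edges


pages = {
    ("standard-A.pdf", 3): "Cabin oxygen and pressure limits.",
    ("standard-A.pdf", 9): "Oxygen sensor calibration.",
    ("standard-B.pdf", 7): "Crew radiation exposure.",
}
locations, edges = build_concept_map(pages)
print(sorted(locations["oxygen"]))  # [('standard-A.pdf', 3), ('standard-A.pdf', 9)]
print(edges["oxygen"])              # {'pressure'}
```

Looking up a concept then points straight to the pages worth retrieving, instead of scanning every PDF for each question.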

- In the second stage, an already trained language model finds patterns in the text of the technical and standards sheets, cross-references them with the sensor data, and logically determines whether or not the standard is being met.

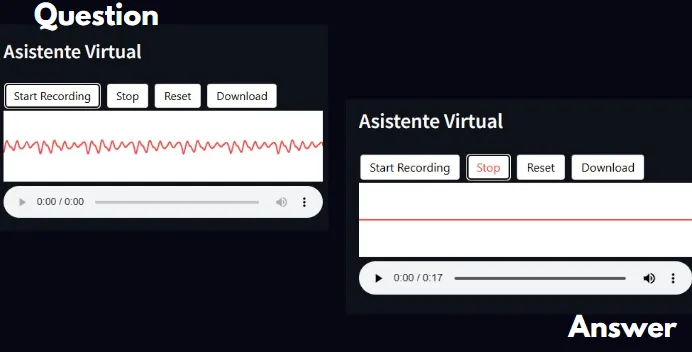
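One way to keep this stage safe is to split it in two: the language model only turns a requirement sentence into a structured rule, and the compliance verdict is computed by plain code against the sensor reading. In the sketch below a regular expression stands in for the model's extraction step; the two supported phrasings ("shall not exceed", "shall be at least") are assumptions, and the limits shown are illustrative, not real NASA values.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    quantity: str
    operator: str  # "<=" for upper limits, ">=" for lower limits
    limit: float
    unit: str


# Stand-in for the language model's extraction step: two assumed phrasings.
PATTERN = re.compile(
    r"(?P<quantity>[\w ]+?) shall (?P<phrase>not exceed|be at least) "
    r"(?P<limit>\d+(?:\.\d+)?) ?(?P<unit>\w+)"
)
OPERATORS = {"not exceed": "<=", "be at least": ">="}


def extract_rule(sentence: str) -> Optional[Rule]:
    """Turn a requirement sentence into a structured rule, or None if unrecognized."""
    match = PATTERN.search(sentence)
    if match is None:
        return None  # Refuse rather than guess when the phrasing is unknown.
    return Rule(
        quantity=match["quantity"].strip().lower(),
        operator=OPERATORS[match["phrase"]],
        limit=float(match["limit"]),
        unit=match["unit"],
    )


def complies(rule: Rule, reading: float) -> bool:
    """Deterministic verdict: the model never decides compliance itself."""
    if rule.operator == "<=":
        return reading <= rule.limit
    return reading >= rule.limit


noise = extract_rule("Cabin noise shall not exceed 60 dB.")
print(noise)                  # Rule(quantity='cabin noise', operator='<=', limit=60.0, unit='dB')
print(complies(noise, 55.0))  # True
print(complies(noise, 72.0))  # False
```

Because the comparison is ordinary code, a hallucination can at worst produce an unrecognized rule (returned as `None`), never a fabricated "compliant" verdict.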

- The third phase covers how the user interacts with the tool: they give a spoken instruction and receive an audio response, with no manual interaction required.
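The hands-free loop can be sketched as three swappable stages: speech-to-text, the document-grounded answering pipeline, and text-to-speech. No particular speech engine is implied; the callable types and the text-based stubs below are hypothetical placeholders that let the flow be tested without audio hardware.

```python
from typing import Callable

# Hypothetical plug-in points; any STT / TTS engine could be wrapped to fit them.
SpeechToText = Callable[[bytes], str]
Answerer = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]

FALLBACK = "Sorry, I did not catch that. Please repeat the question."


def handle_voice_request(
    audio: bytes, stt: SpeechToText, answer: Answerer, tts: TextToSpeech
) -> bytes:
    """One hands-free turn: spoken question in, synthesized answer out."""
    question = stt(audio).strip()
    if not question:
        # Never send an empty or unintelligible query to the answering stage.
        return tts(FALLBACK)
    return tts(answer(question))


# Text-based stubs standing in for real audio engines, for testing the flow.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_answer(question: str) -> str:
    return f"Answer to: {question}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


print(handle_voice_request(b"Is cabin pressure nominal?", fake_stt, fake_answer, fake_tts))
```

Keeping the stages as injected callables means the speech engines can be replaced without touching the retrieval and verification logic.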

This approach reduces the risk of returning erroneous or hallucinated data, makes the answers more robust, and allows the documents to be reviewed and contrasted in real time with the mission's sensor data.

The proposal also lets any NASA technical document be ingested by the categorization architecture using only a lightweight (10 KB) part-of-speech (“POS”) tagger, which keeps computational costs low.
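The post does not say which tagger was used. As a hypothetical illustration of why part-of-speech tagging can be so cheap, the sketch below is a deliberately tiny rule-based tagger (a closed-class lexicon plus a few suffix heuristics, defaulting to NOUN) whose consecutive nouns are kept as candidate concepts for the concept map. Its rules are crude; a trained statistical tagger would be far more accurate.

```python
import re

# Hypothetical mini-lexicon of closed-class words (determiners, prepositions, modals...).
LEXICON = {
    "the": "DET", "a": "DET", "an": "DET", "of": "ADP", "in": "ADP",
    "for": "ADP", "to": "ADP", "on": "ADP", "and": "CONJ", "or": "CONJ",
    "shall": "AUX", "must": "AUX", "be": "VERB", "is": "VERB", "are": "VERB",
    "not": "PART",
}
# Suffix heuristics, checked in order; anything unmatched defaults to NOUN.
SUFFIX_RULES = [("ly", "ADV"), ("ing", "VERB"), ("ed", "VERB"),
                ("al", "ADJ"), ("ous", "ADJ"), ("ive", "ADJ")]


def tag(text: str) -> list[tuple[str, str]]:
    """Assign a coarse part-of-speech tag to each word."""
    tagged = []
    for word in re.findall(r"[A-Za-z0-9]+", text):
        lower = word.lower()
        if lower in LEXICON:
            tagged.append((word, LEXICON[lower]))
        elif lower.isdigit():
            tagged.append((word, "NUM"))
        else:
            pos = next((p for suffix, p in SUFFIX_RULES if lower.endswith(suffix)), "NOUN")
            tagged.append((word, pos))
    return tagged


def noun_phrases(text: str) -> list[str]:
    """Group consecutive NOUN tokens into candidate concepts."""
    phrases, current = [], []
    # A sentinel tag flushes the last noun run at the end of the text.
    for word, pos in tag(text) + [("", "END")]:
        if pos == "NOUN":
            current.append(word.lower())
        elif current:
            phrases.append(" ".join(current))
            current = []
    return phrases


print(noun_phrases("The cabin pressure must be monitored continuously."))  # ['cabin pressure']
```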

The architecture also scales: concept patterns are indexed across multiple documents, so the system can return the exact location of the relevant information.

If you are interested in reading the full documentation on this topic, I leave you a link so you can read it in full.